By the Numbers is a new semi-regular blog series I intend to write, based on interesting (to me at least) numerical artifacts from the data at https://stadiastats.jdeslip.com that is used to produce our Sunday Stadia Stats posts and The Numbers Show content.

Let me preface this post by admitting that I’m personally not a fan of Luna. I’ve been irked that, so far at least, Amazon hasn’t defined a proper gaming platform (e.g. APIs for friends, true multiplayer, achievements, cloud features etc.) that developers can integrate into games. IMO, Luna is currently a step backwards for the cloud – back to the days of PC gaming before Steam and others came along to provide a uniform experience and platform niceties. Given my opinion, if you want to write this article off, you are welcome to! But, the raw numbers, at least, are what they are.

About Tracking App Reviews and Downloads

App stores, e.g. the Apple App Store and the Google Play store, tend to update app download numbers only on the days that an app passes a major milestone. For example, on Google Play, app download counts are only updated when an app passes 100, 500, 1,000, 5,000, 10,000, 50,000, etc. downloads. However, these same stores update review counts for every app review that comes in. Analysts (and posers like myself) have figured out that the download count is often correlated with the review count. Without loss of generality, we can write the relationship as:

D(R)=a(R)*R

Here D is the number of downloads as a function of R, the number of reviews, and a(R) is some unknown app-dependent function. Now, what I and others do is assume that a(R) is a slowly varying function of R, so that, for example, a(5,000) ~ a(1,000). This appears to be true in practice for many applications. For example, for the Stadia app: a(500,000) = 96 and a(1,000,000) = 104. For the GamePass app: a(5,000,000) = 55 and a(10,000,000) = 64. For GeForce Now it actually stayed nearly identical: a(1,000,000) = a(5,000,000) = 137. (In these examples, the argument of a is the download milestone at which the ratio was measured.)
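To make "slowly varying" a bit more concrete, here is a minimal Python sketch that computes how much the ratio drifted between the two milestones quoted above for each app. The ratio values come straight from the paragraph above; the function and variable names are my own, purely for illustration.

```python
def ratio(downloads: int, reviews: int) -> float:
    """a = D / R, the downloads-per-review ratio observed at a milestone."""
    if reviews <= 0:
        raise ValueError("need at least one review to compute a ratio")
    return downloads / reviews


def relative_drift(a_earlier: float, a_later: float) -> float:
    """Fractional change in the ratio between two consecutive milestones."""
    return (a_later - a_earlier) / a_earlier


# (earlier milestone ratio, later milestone ratio), as quoted in the text
RATIOS = {
    "Stadia": (96, 104),
    "GamePass": (55, 64),
    "GeForce Now": (137, 137),
}

for app, (earlier, later) in RATIOS.items():
    print(f"{app}: a drifted by {relative_drift(earlier, later):+.1%}")
```

In all three cases the ratio moves by well under 20% even though downloads grew by 2x to 5x between the milestones, which is the sense in which a(R) is treated as roughly constant.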

Getting Back to Luna

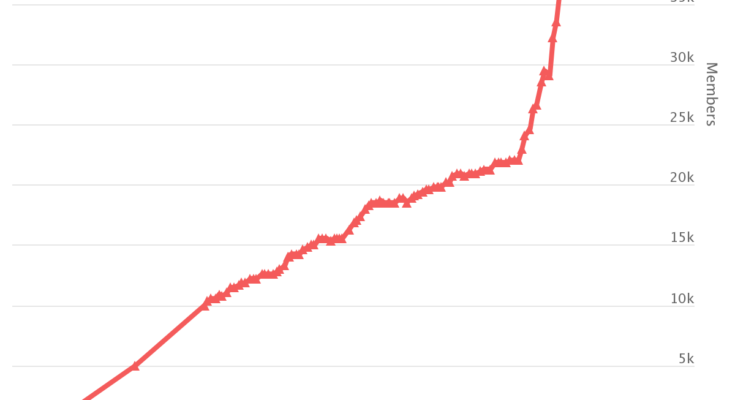

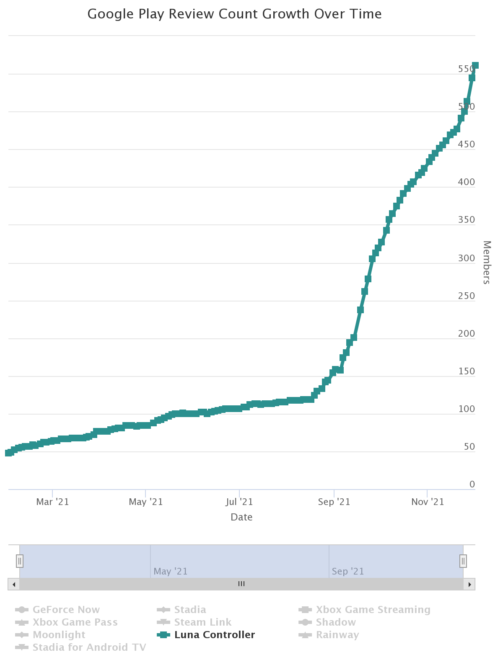

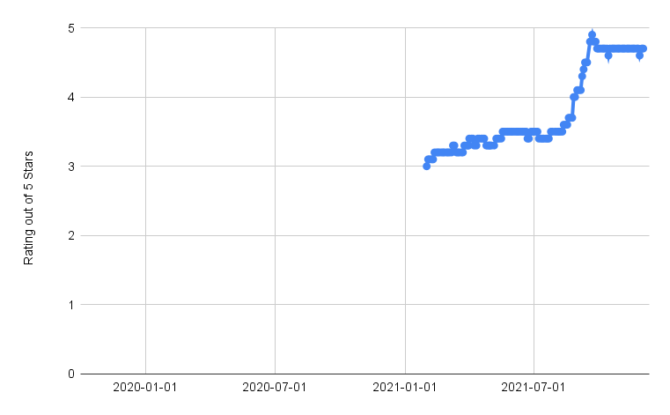

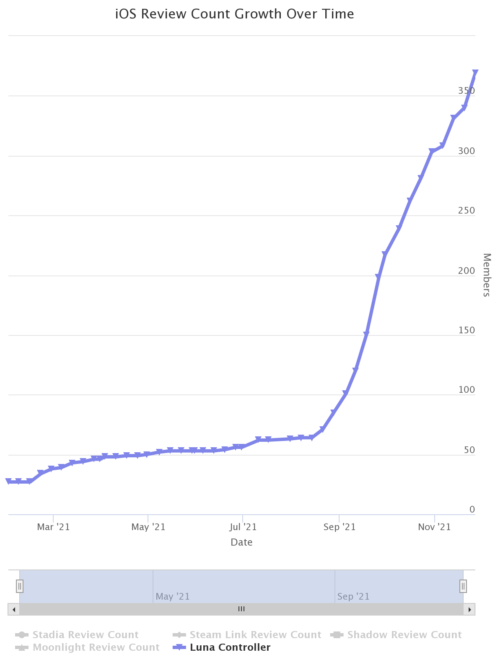

What does all this have to do with Luna? Getting there now! I track the iOS and Google Play review counts for a number of cloud gaming platforms. The Luna controller app passed the 10,000 download mark back in February when it had 54 reviews, yielding a(10,000) = 185, a seemingly reasonable ratio of downloads to reviews. The review count continued to grow fairly slowly but consistently after this date until around August, when the growth rate increased significantly (by ~10x) and the review score quickly went from 3.4 stars to 4.7 stars (with most of the new reviews being 5 stars).

Interestingly, the uptick in reviews doesn’t appear to be correlated with any Luna news. It started well after Prime Day and well before Luna’s remote “couch” play was announced. Despite the “beta” or “early access” label, Amazon Luna seems to have, for practical purposes, been available for a long time to anyone in the US who wanted to try it.

There was a similar uptick in the review rate for the iOS app starting in August:

However, no other Amazon Luna growth metric has had a similar uptick. Nothing much changed in the growth rate of any other metric, including Twitter followers, Instagram followers, YouTube subscribers, (unofficial) subreddit members, Facebook group membership, etc.

So, I’ve been a bit puzzled and anxious to see if the actual download count would match the growth in reviews. Using a constant a(R) of 185, here is how I have been projecting the download count for the Luna app:

Based on the review activity, one would predict that the app would have passed 50,000 downloads months ago. The above plot, however, has been flat at 49,999 since September, a reflection of the reality that the Google Play download count still reads 10,000 (meaning the app has not yet crossed the 50,000 milestone). I.e. actual downloads have not kept up with the number of reviews.
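For the curious, the projection logic can be sketched in a few lines of Python. This is a rough sketch and not the exact code behind the site: the clamping to the range between the last milestone passed and one below the next milestone is my assumption about how to keep the projection consistent with what the store displays, and all of the names are illustrative.

```python
def project_downloads(
    reviews: int,
    a: float = 185.0,            # ratio measured at the 10,000 milestone
    last_milestone: int = 10_000,  # what the store currently displays
    next_milestone: int = 50_000,  # next value the store would display
) -> int:
    """Project downloads as a * R, clamped to what the store count allows.

    While the store still shows `last_milestone`, the true count must be
    at least that and strictly below `next_milestone` (assumed clamp).
    """
    raw = round(a * reviews)
    return max(last_milestone, min(raw, next_milestone - 1))


# 54 reviews is the count from the post at the 10,000 milestone; the other
# review counts are made-up inputs, only there to show the clamp kicking in.
for r in (54, 100, 300):
    print(r, project_downloads(r))
```

Once a * R exceeds the next milestone, the projection simply sits at 49,999 until the store count actually changes, which is the flat line described above.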

At this point it is clear that an a(R) value of 185 no longer holds. In fact, the highest value that a(R) can possibly be at this point (if the app were to officially pass 50,000 downloads tonight) is 89, a reduction in the ratio of downloads to reviews of more than 2x since the last milestone.
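The upper bound works like this: as long as the store still shows the old milestone, true downloads are below the next milestone, so a(R) must be below next_milestone / R. Here is a small Python sketch of that arithmetic. The current review count is not quoted in this post, so the sketch back-derives an approximate value from the 89 figure instead of asserting one; the names are hypothetical.

```python
def max_ratio(next_milestone: int, reviews: float) -> float:
    """Upper bound on a(R) while downloads are still below next_milestone."""
    if reviews <= 0:
        raise ValueError("need at least one review")
    return next_milestone / reviews


def reviews_implied_by_bound(next_milestone: int, bound: float) -> float:
    """Invert the bound: which review count gives this maximum ratio?"""
    return next_milestone / bound


a_february = max_ratio(10_000, 54)  # ratio right at the 10,000 milestone
implied_reviews = reviews_implied_by_bound(50_000, 89)  # back-derived, approximate

print(f"a at the 10,000 milestone: {a_february:.0f}")
print(f"reviews implied by a bound of 89: about {implied_reviews:.0f}")
print(f"drop in the ratio: at least {a_february / 89:.2f}x")
```

Every additional review that arrives before the store count changes pushes this ceiling lower still.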

a(R) could potentially be as low as 30 or 40 (and falling over time). Amazon certainly has a track record of getting users to create reviews, and many apps actively seek positive reviews from within their app experience. App reviews are often flooded for all sorts of valid-ish reasons, and we’re also dealing with statistics of relatively small numbers here. So, I’m certainly not saying anything fraudulent is going on. But, it is “unusual,” and I think it is fair to say that the review rate for the Amazon Luna mobile apps likely does not reflect downloads, and the score itself may not reflect organic reviews.